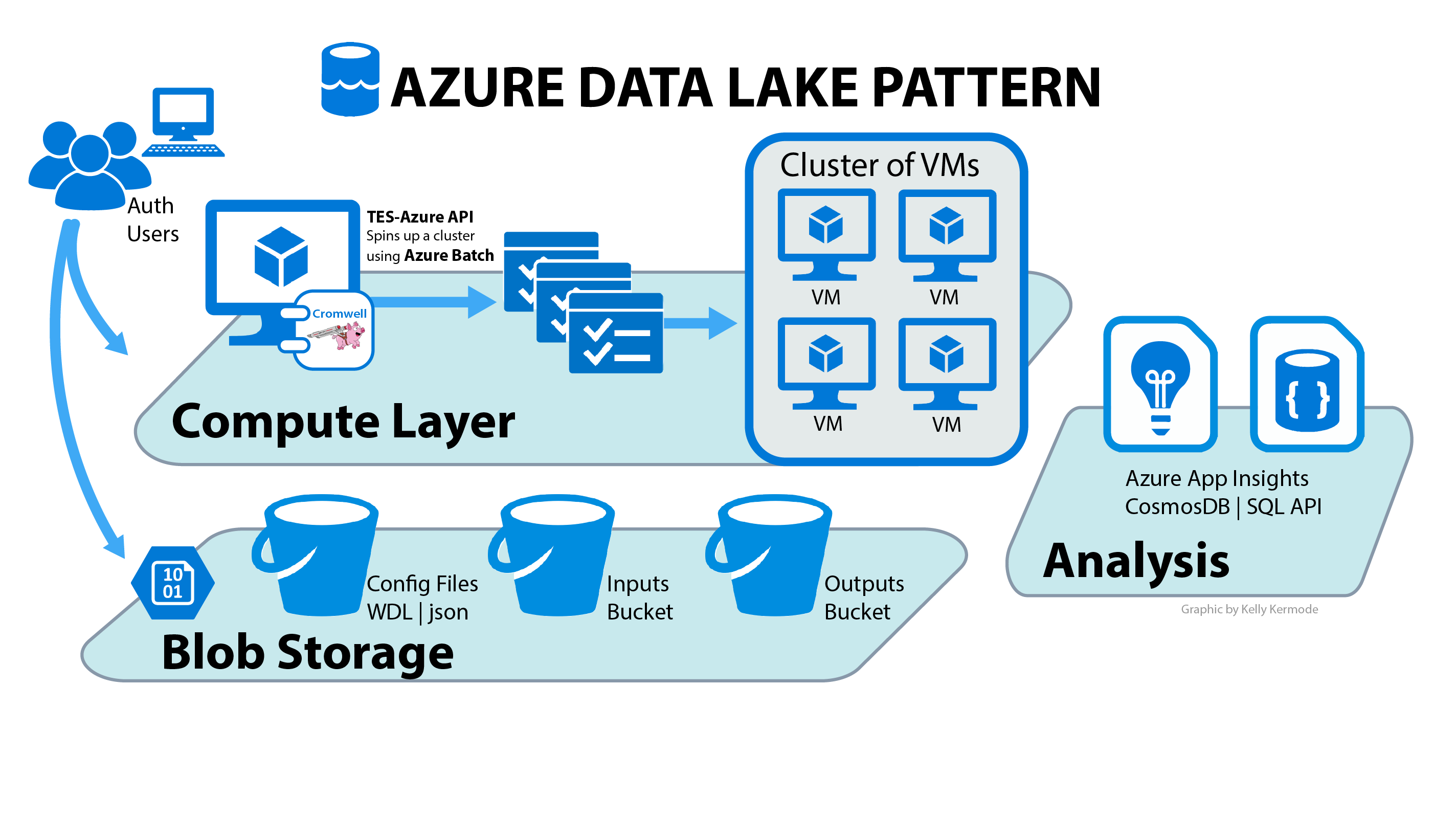

The most important feature of Data Lake Analytics is its ability to process unstructured data by applying schema-on-read logic, which imposes a structure on the data as you read it.
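As a rough illustration of schema-on-read, the sketch below stores raw text untouched and applies a structure only when the data is read. It uses plain Python rather than Data Lake Analytics itself; `RAW_LINES`, `SCHEMA`, and `read_with_schema` are hypothetical names invented for this example.

```python
import csv
import io

# Raw data as it might sit in a lake: untyped text, not validated on write.
RAW_LINES = (
    "2024-01-05,sensor-a,21.5\n"
    "2024-01-05,sensor-b,not-a-number\n"
    "2024-01-06,sensor-a,22.0\n"
)

# Hypothetical schema: (column name, parser) pairs applied only at read time.
SCHEMA = [("day", str), ("device", str), ("temperature", float)]


def read_with_schema(raw_text, schema):
    """Yield one dict per row, imposing `schema` on the raw text while reading."""
    for fields in csv.reader(io.StringIO(raw_text)):
        row = {}
        for (name, parse), value in zip(schema, fields):
            try:
                row[name] = parse(value)
            except ValueError:
                # Keep the row, but mark the value that does not fit the schema.
                row[name] = None
        yield row


rows = list(read_with_schema(RAW_LINES, SCHEMA))
```

Because the stored text never changes, a different schema can be applied to the same data later without rewriting it.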

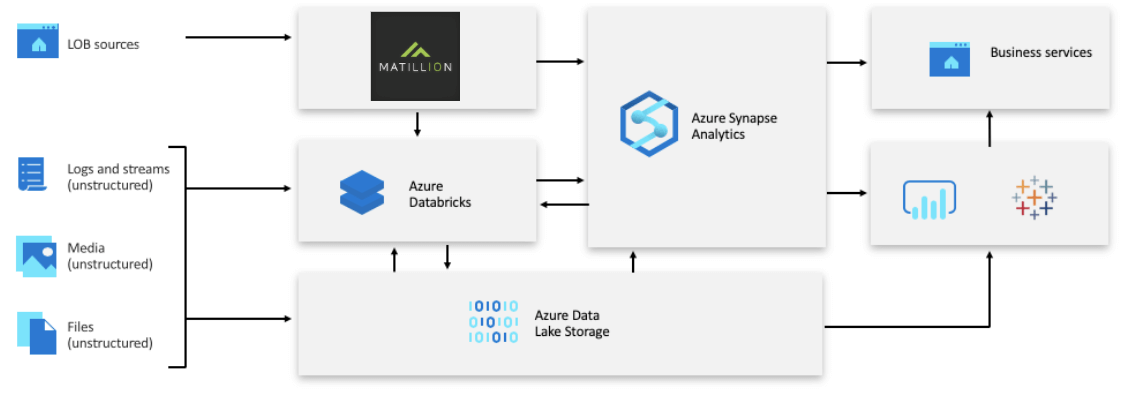

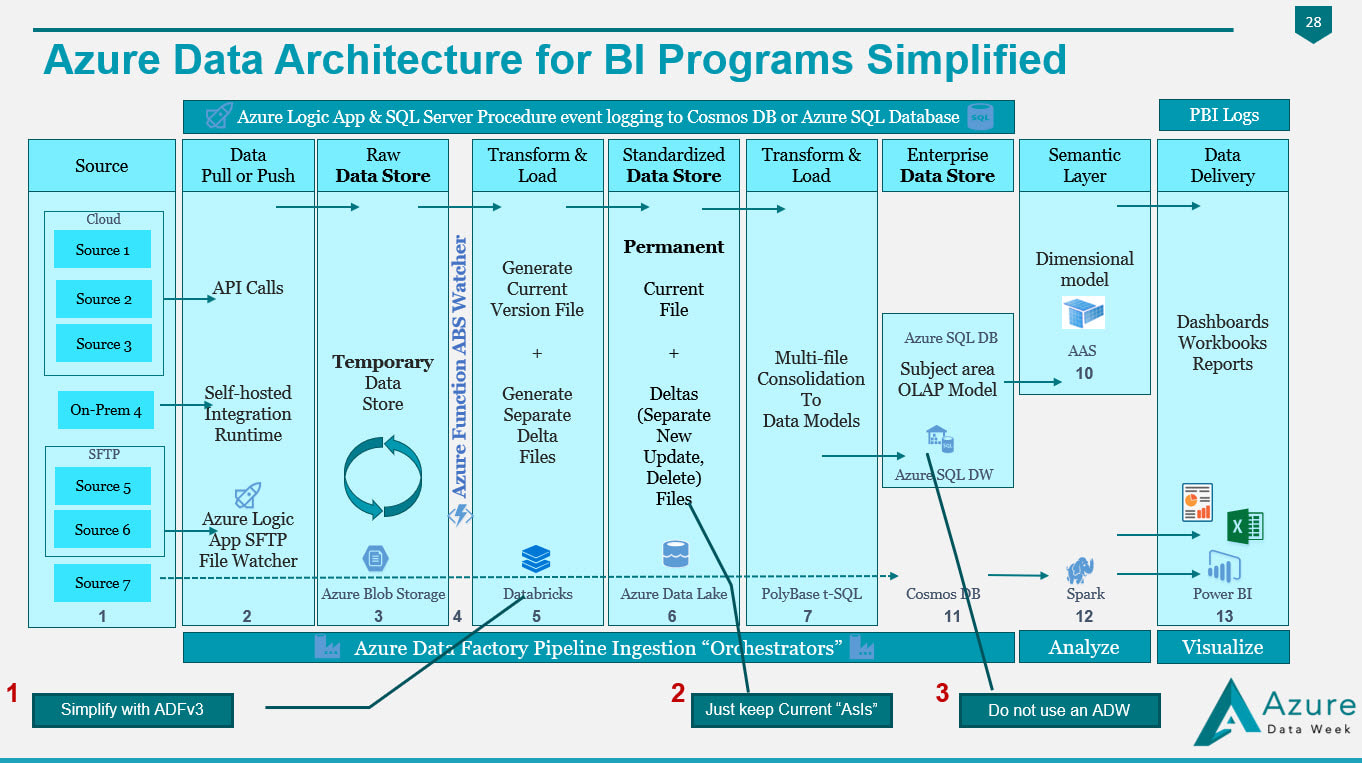

Which of the following components does the Azure Data Lake architecture include?

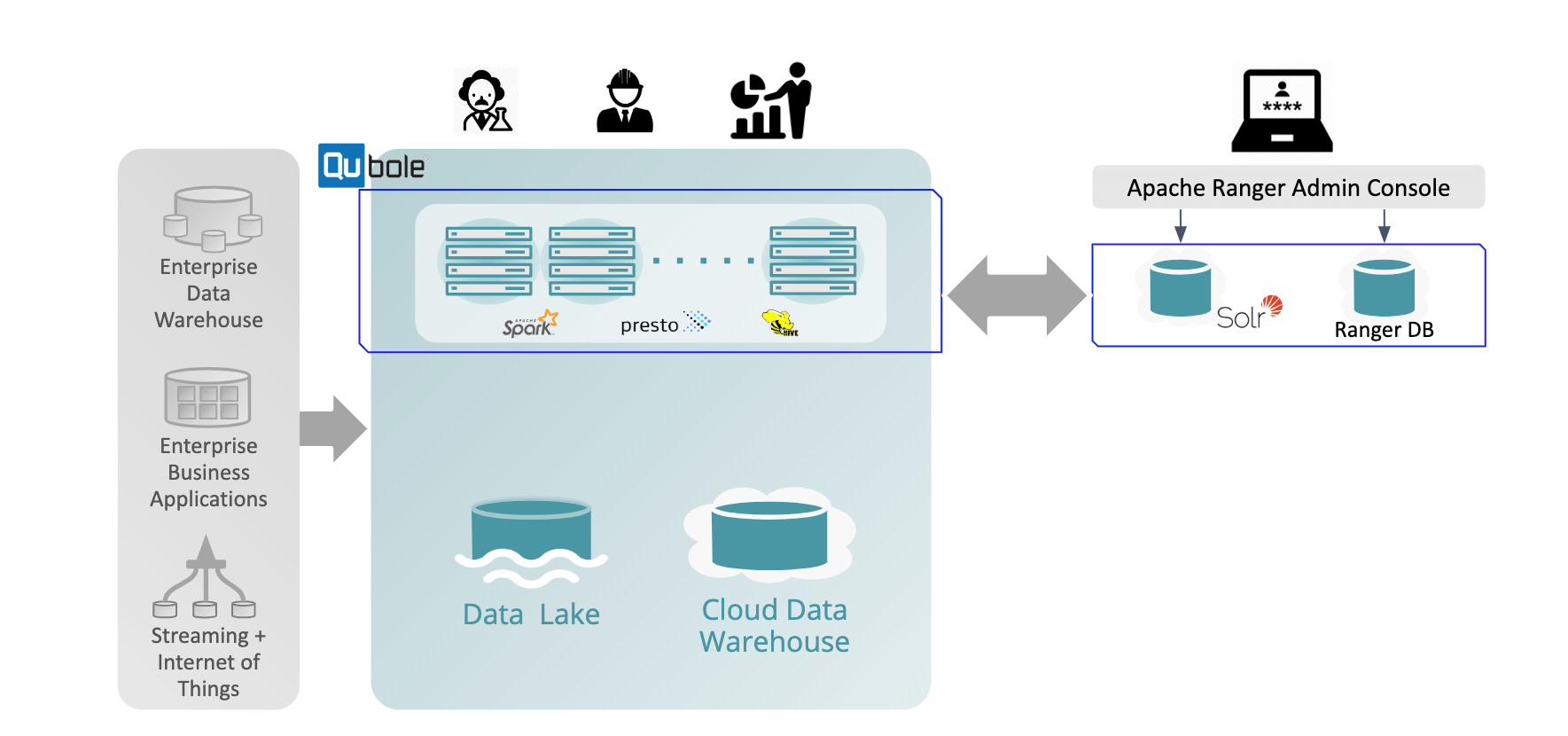

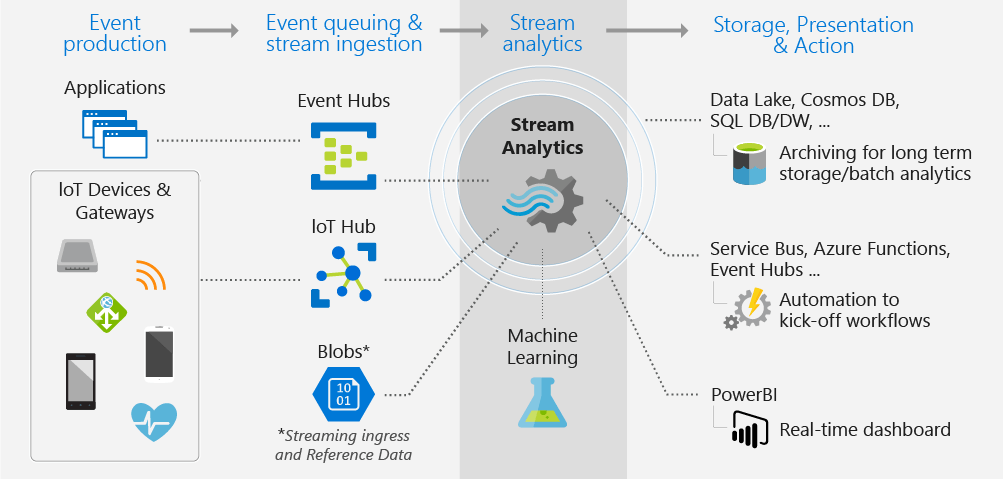

Data lake processing involves one or more processing engines that are built with these goals in mind and can operate at scale on data stored in a data lake.

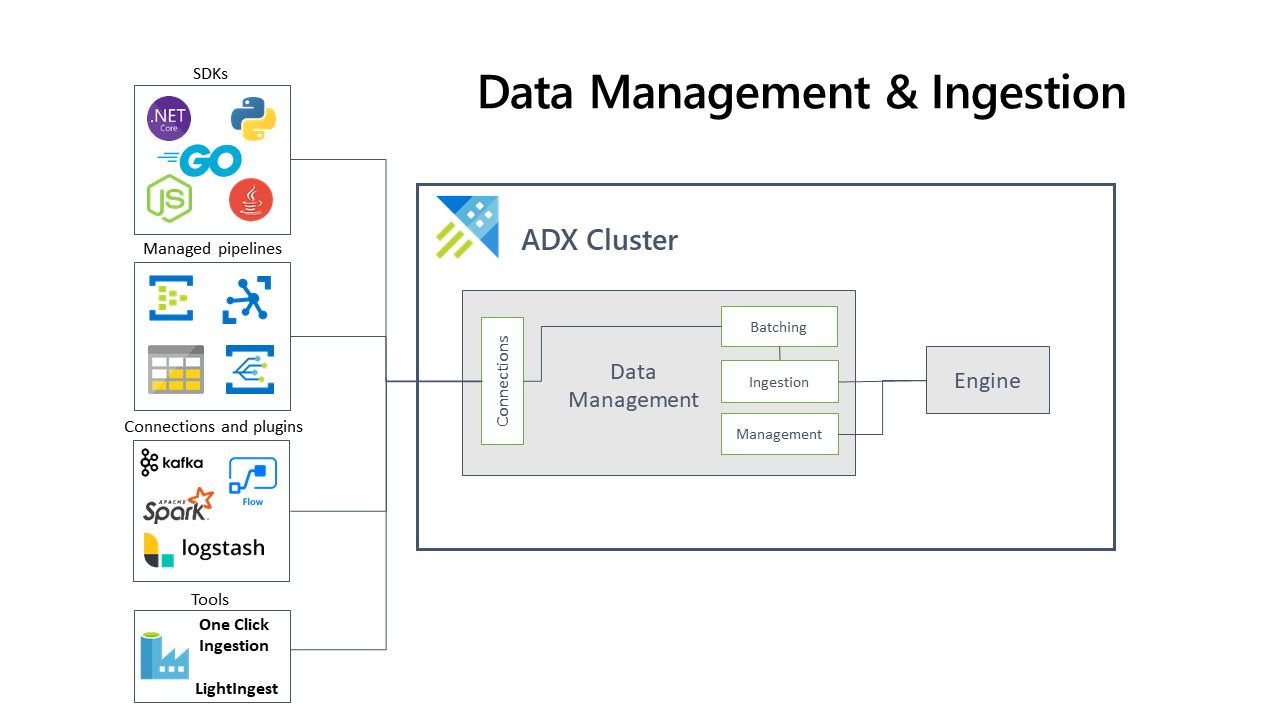

The features that it offers are mentioned below.

Individual solutions may not contain every item in this diagram.

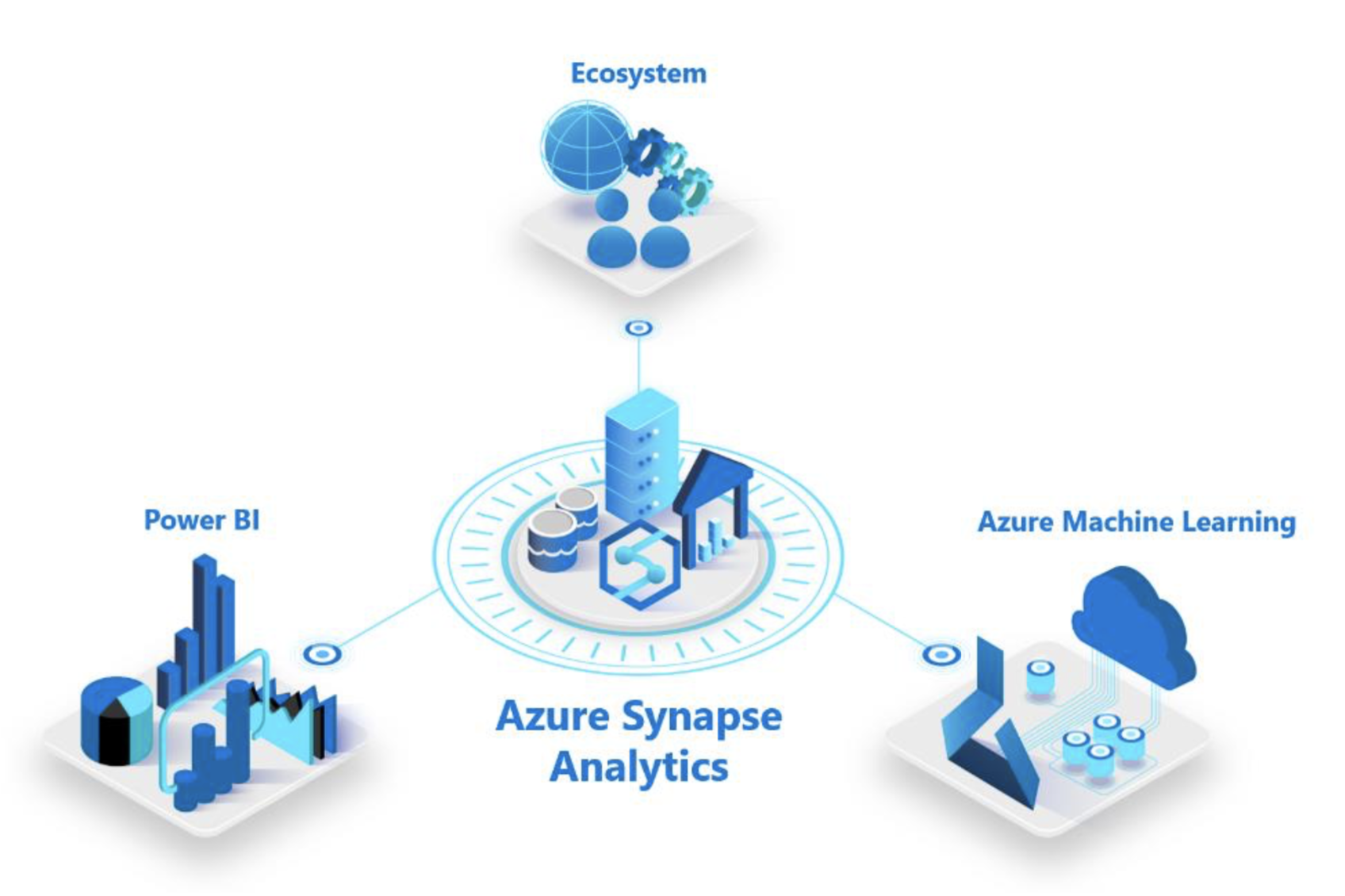

Azure Data Lake Analytics is the latest Microsoft data lake offering.

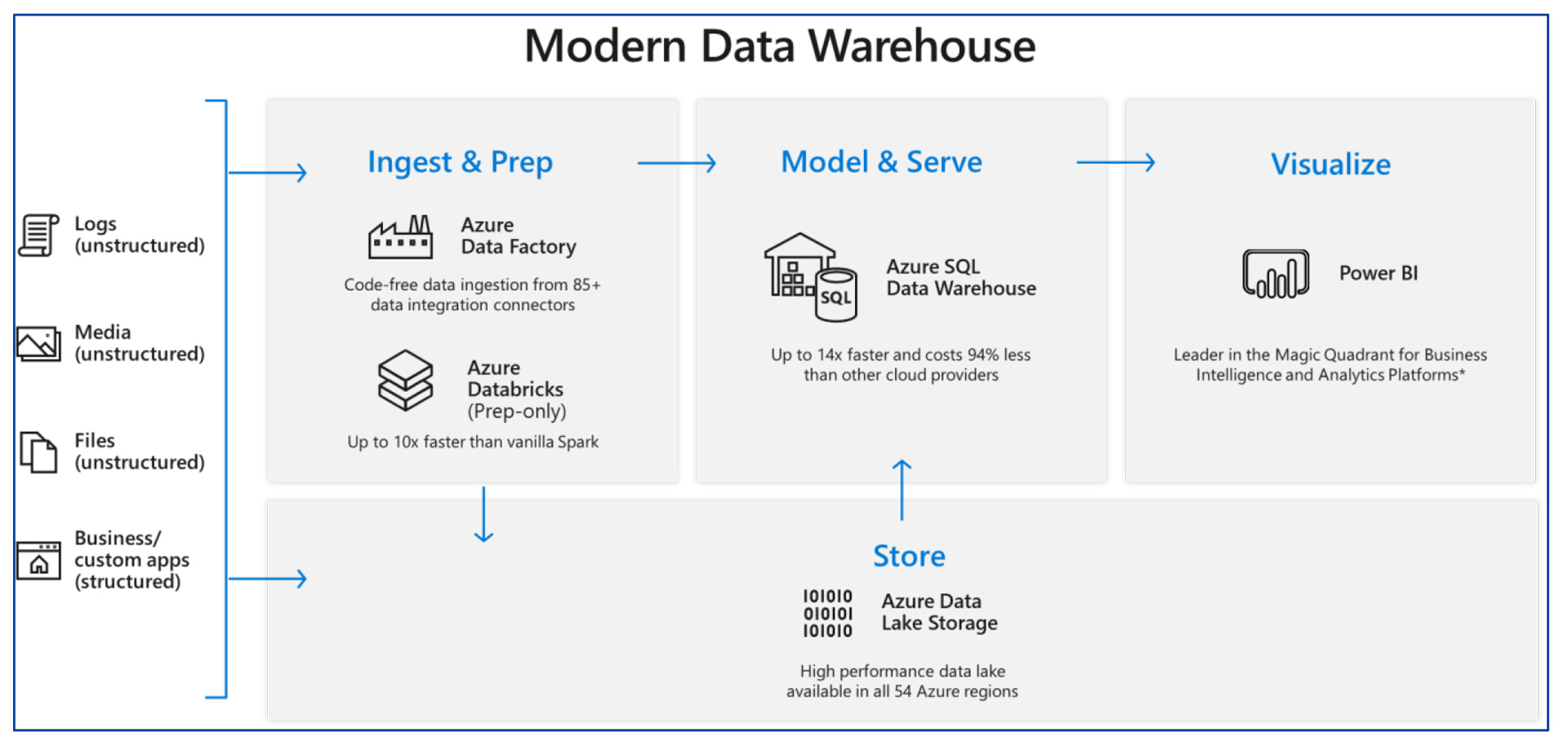

Most big data architectures include some or all of the following components.

Azure Data Lake includes all the capabilities required to make it easy for developers, data scientists, and analysts to store data of any size, shape, and speed, and to do all types of processing and analytics across platforms and languages.

Options for implementing this storage include Azure Data Lake Store or blob containers in Azure Storage.

The following diagram shows the logical components that fit into a big data architecture.

Components of a big data architecture.

It is an in-depth data analytics tool that lets users write business logic for data processing.

A data lake can also act as the data source for a data warehouse.
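To make the lake-to-warehouse relationship concrete, here is a minimal sketch that reads semi-structured JSON lines (standing in for a file in the lake) and loads only the curated columns into a relational table. It uses an in-memory SQLite database as a stand-in for a warehouse; the `orders` table, the field names, and `load_into_warehouse` are assumptions made for illustration.

```python
import json
import sqlite3

# Stand-in for a JSON-lines file in the lake; records may carry extra fields.
LAKE_FILE_CONTENT = (
    '{"order_id": 1, "amount": 19.99, "note": "gift"}\n'
    '{"order_id": 2, "amount": 5.00}\n'
)


def load_into_warehouse(conn, raw_text):
    """Copy the curated columns from raw lake records into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL)"
    )
    rows = []
    for line in raw_text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        doc = json.loads(line)
        # Only the fields the warehouse models are kept; the rest stay in the lake.
        rows.append((doc["order_id"], doc["amount"]))
    conn.executemany("INSERT INTO orders (order_id, amount) VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)


conn = sqlite3.connect(":memory:")
loaded = load_into_warehouse(conn, LAKE_FILE_CONTENT)
total = conn.execute("SELECT SUM(amount) FROM orders").fetchone()[0]
```

The raw records remain available in the lake for exploration, while the warehouse holds only the structured subset needed for reporting.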

Typical uses for a data lake include data exploration, data analytics, and machine learning.

All big data solutions start with one or more data sources.

Because the data sets are so large, a big data solution must often process data files using long-running batch jobs to filter, aggregate, and otherwise prepare the data for analysis.
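The filter-and-aggregate pattern described above can be sketched in a few lines. This is a toy, single-process version, not a distributed batch engine; the record layout (`user`, `bytes`) and the `batch_job` function are hypothetical.

```python
from collections import defaultdict


def batch_job(records, min_bytes=1):
    """Filter out small records, then aggregate bytes per user."""
    totals = defaultdict(int)
    for record in records:  # `records` can be any iterable, including a generator
        if record["bytes"] < min_bytes:
            continue  # filter step: drop records below the threshold
        totals[record["user"]] += record["bytes"]  # aggregate step
    return dict(totals)


sample = [
    {"user": "ana", "bytes": 120},
    {"user": "ben", "bytes": 0},
    {"user": "ana", "bytes": 30},
    {"user": "ben", "bytes": 75},
]
summary = batch_job(sample)
```

Since the function consumes its input one record at a time, the same logic works on a generator that streams records from large files instead of an in-memory list.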

The ability to store and analyze data of any kind and size.